#1. A Lack of Indexes

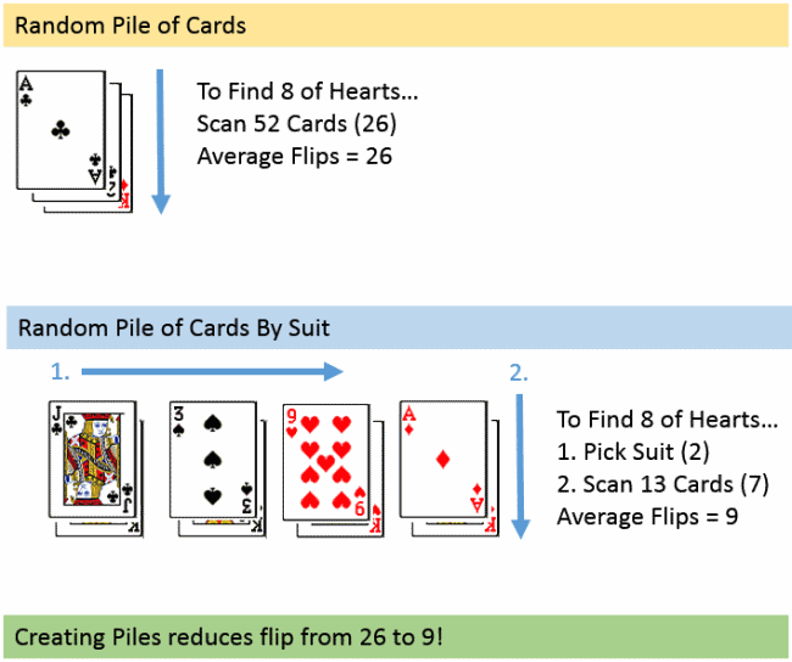

Database indexes let queries to efficiently retrieve data from a database. These indexes work similarly to the index at the end of a book: You can look at 10 pages to find the information that you need rather than searching through the full 1,000 pages.

How Indexes Increase Efficiency – Source: Essential SQL

You should use database indexes in many situations, such as:

- Foreign Keys – Indexing foreign keys lets you to efficiently query relationships, such as a “belongs to” or “has many” relationship.

- Sorted Values – Indexing frequently used sorting methods can make it easier to retrieve sorted data rather than relying on “group by” or other queries.

- Uniqueness – Indexing unique values in a database enables you to efficiently look up information and instantly validate uniqueness.

Database indexes can dramatically improve the performance of large and unorganized datasets, but that doesn't mean that you should use it for every situation. After all, database indexes are separate tables that take up additional space and require special queries to access – it’s not a free operation by any means.

#2. Inefficient Querying

Most developers aren't database experts and the code they write can lead to significant performance issues. The most common problem is the so-called N+1 query, which is a common cause of insidious performance bottlenecks in web applications.

For example, suppose you want to query the database for a list of items.

You might write the following iterator in PHP:

The problem with this approach is that you're executing many separate queries rather than combining them into a single query. It's much faster to execute one query with 100 results than 100 queries with a single result.

The solution is batching queries:

N+1 queries can be difficult to detect because they don't creep up until you have a lot of queries. You may be testing on a development machine with only a handful of queries without an issue, but as soon as you push the code to production, you could see dramatic performance issues under production loads.

#3. Improper Data Types1

Databases can store nearly any type of data, but it's important to choose the correct data type for the job to avoid performance issues. For instance, it’s usually a good idea to select the data type with the smallest size and best performance for a given task.

There are a few best practices to keep in mind:

- Size – Use the smallest data size that will accommodate the largest possible value. For example, you should use `tinyint` instead of `int` if you're only storing small values.

- Keys – Primary keys should use simple `integer` or `string` data types rather than `float` or `datetime` to avoid unnecessary overhead.

- NULL – Avoid NULL values in fixed-length columns since NULL consumes the same space as an input value would. These values can add up quickly in large columns.

- Numbers – Avoid storing numbers as strings even if they aren't used in mathematical operations. For example, a ZIP code or phone number integer is smaller than a string.

There are also several complex data types that can add value in certain circumstances but come with gotchas.

For example, Postgresql’s ENUM data type makes it easy to include an enumerable list in a single database item. While ENUM is great for reducing join complexity and storage space, long lists of values, or variable values, can introduce performance issues since it makes database queries a lot more expensive.

How to Spot Problems

The problem with inefficient database queries is that they’re difficult to detect until it's too late. For example, a developer may experience very little lag with a small sample database and just his local machine, but a production-level number of simultaneous queries on a much larger database could grind the entire application to a halt.

Load tests are the best way to avoid these problems by simulating production-level traffic before pushing any code to production users. While most load tests simulate traffic using protocols, the best platforms spin up real browser instances for the most accurate performance results.

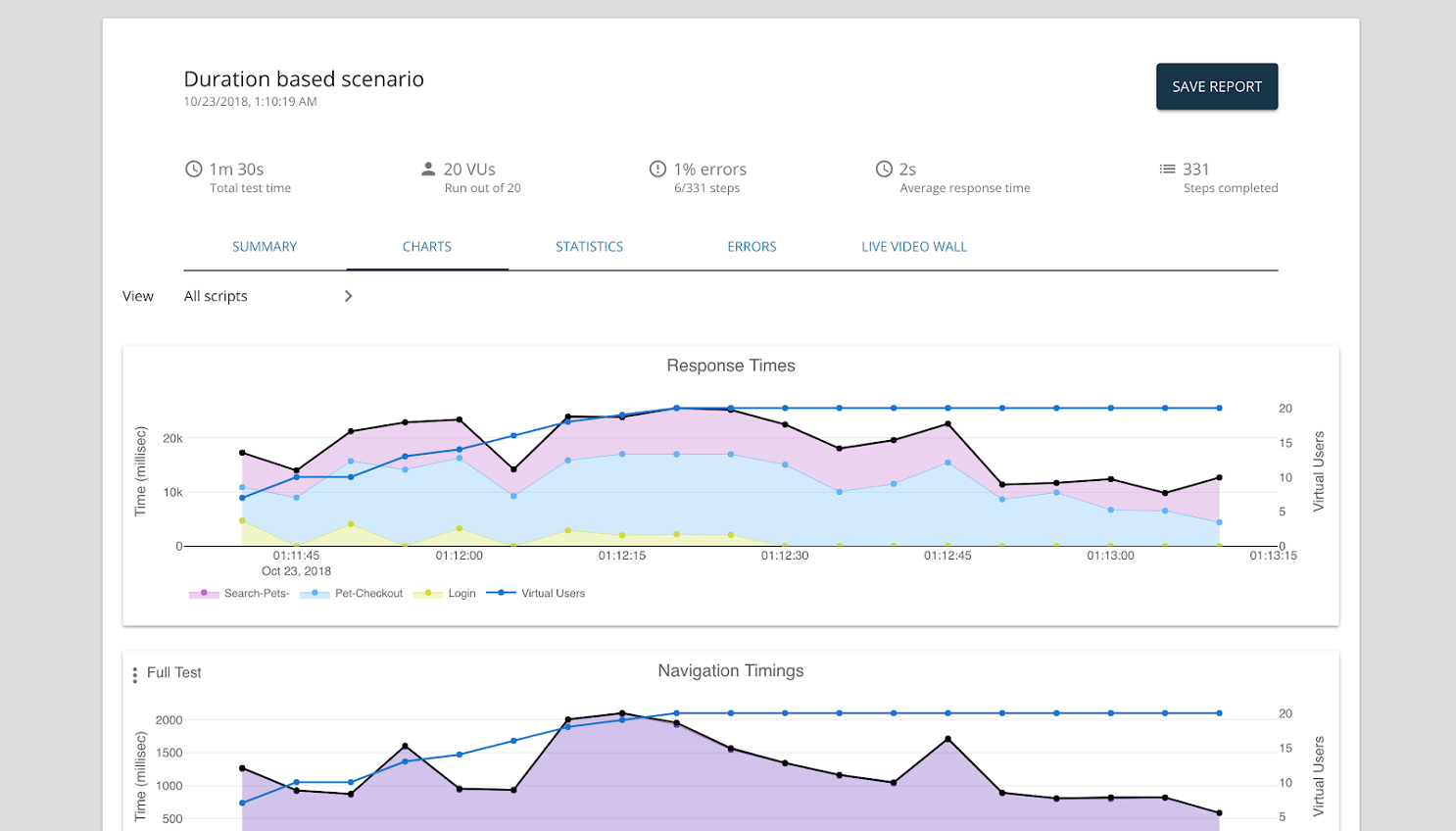

LoadNinja’s In-Depth Analytics – Source: LoadNinja

LoadNinja enables you to record and instantly replay load tests in minutes rather than hours. These tests are run across tens of thousands of real browsers in the cloud to generate a realistic load. Engineers can then access step times, browser resources, and other actionable metrics.

Sign up for a free LoadNinja trial

See how easy it is to get started with load testing today!

Load tests are only helpful if they're run in advance of a production deployment. Using a continuous integration (CI) and deployment (CD) process, you can ensure that load tests run with each merge into the master or pre-production branch well-before the final push to production.

It’s an Easy Fix

Database performance issues are a common cause of web application bottlenecks. Most of these problems boil down to a lack of indexing, inefficient queries, and the misuse of data types, which can all be easily fixed. The challenge is identifying them before they reach production.

Load testing is the best way to ensure database bottlenecks – and other performance issues – are identified before they reach production users. Using this solution, you can easily incorporate these tests into your CI/CD pipeline and make fixes before they become costly problems.

Sign up for a free LoadNinja trial

Make these issues non-issues